IEEE ICASSP Workshop

Explainable Machine Learning for Speech and Audio

15th April 2024 - Seoul, Korea

The first workshop on Explainable Machine Learning for Speech and Audio aims to foster research on interpretability for audio and speech processing with neural networks. The workshop focuses on fundamental and applied challenges to interpretability in the audio domain, including formal descriptions of interpretability, model evaluation strategies, and novel techniques for explaining neural network predictions.

Overview

Deep learning has revolutionized the fields of speech and audio. However, most neural-network models employed in these fields remain black boxes that are opaque and hard to interpret. This lack of transparency hinders trust in these models and limits the adoption of audio-based AI systems in critical domains such as healthcare and forensics. Explanation methods and interpretable models also hold potential for improving audio applications in information retrieval, such as music genre classification and recommendation systems.

Post-hoc explanation methods aim to provide human-understandable interpretations that justify why a trained classifier makes a given decision. Specifically, these methods aim to answer the question “Why does this particular input lead to that particular output?” In the audio domain, these explanations are typically presented as highlights on the spectrogram or as listenable outputs that emphasize the elements of the input responsible for the classification. Examples of interpretability methods applied in the audio domain include layer-wise relevance propagation [3, 2, 9, 1, 5], guided backpropagation [10], LIME-based methods [8, 7, 6, 4, 13], and concept-based methods [12, 11].
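To make the idea of spectrogram highlights concrete, here is a minimal LIME-style sketch. It is not the procedure of any cited paper: it assumes the interpretable components are fixed rectangular time-frequency patches (audioLIME [6], for instance, uses separated sources instead), that "removing" a patch means zeroing it, and that `classify` is a hypothetical black-box function returning one class score. A weighted linear surrogate fitted to the scores of the perturbed inputs yields one relevance weight per patch; the kernel width and sample count are arbitrary choices.

```python
import numpy as np


def lime_spectrogram(spec, classify, patch=(8, 8), n_samples=500,
                     kernel_width=0.25, seed=0):
    """LIME-style relevance map over rectangular time-frequency patches.

    spec:     magnitude spectrogram, shape (freq_bins, frames)
    classify: hypothetical black box mapping a spectrogram to a class score
    Returns an array of shape (freq_patches, time_patches).
    """
    rng = np.random.default_rng(seed)
    n_f, n_t = spec.shape[0] // patch[0], spec.shape[1] // patch[1]
    n_seg = n_f * n_t

    # Random binary masks: 1 keeps a patch, 0 silences (zeros) it.
    z = rng.integers(0, 2, size=(n_samples, n_seg))
    z[0] = 1  # always include the unperturbed input
    scores = np.empty(n_samples)
    for i in range(n_samples):
        mask = np.kron(z[i].reshape(n_f, n_t), np.ones(patch))
        perturbed = spec.astype(float)  # astype returns a copy
        perturbed[: n_f * patch[0], : n_t * patch[1]] *= mask
        scores[i] = classify(perturbed)

    # Proximity kernel on the fraction of removed patches
    # (a simplification of LIME's cosine-distance kernel).
    removed = 1.0 - z.mean(axis=1)
    weights = np.exp(-(removed ** 2) / kernel_width ** 2)

    # Weighted least squares for the surrogate: score ≈ b + z @ coef.
    X = np.hstack([np.ones((n_samples, 1)), z])
    sw = np.sqrt(weights)
    coef, *_ = np.linalg.lstsq(X * sw[:, None], scores * sw, rcond=None)
    return coef[1:].reshape(n_f, n_t)


# Toy usage: a "classifier" that only looks at the lowest, earliest patch.
spec = np.ones((32, 32))
relevance = lime_spectrogram(spec, lambda s: float(s[:8, :8].sum()))
print(np.round(relevance, 2))  # patch (0, 0) should dominate
```

The original LIME fits a regularized (ridge) surrogate; plain weighted least squares is used here only to keep the sketch short.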

Another family of approaches aims to construct models that are explainable by design. This category includes classical non-negative matrix factorization (NMF) [14], self-attention used to obtain attention masks [15], and prototypical networks used as interpretable network designs [16, 17]. The typical downside of these approaches is that the model design sacrifices performance in favor of interpretability.
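As an illustration of the by-design family, the sketch below factorizes a non-negative magnitude spectrogram `V` into spectral templates `W` and time activations `H` using the Lee-Seung multiplicative updates for the Euclidean cost. It is a generic NMF routine, not the exact setup of [14]; the number of components, iteration count, and the synthetic two-band data are assumptions for illustration. Each column of `W` is a spectral template and each row of `H` indicates when that template is active, which is what makes the decomposition directly readable.

```python
import numpy as np


def nmf(V, n_components, n_iter=200, eps=1e-10, seed=0):
    """Factorize a non-negative matrix V (freq x frames) as V ≈ W @ H."""
    rng = np.random.default_rng(seed)
    n_freq, n_frames = V.shape
    W = rng.random((n_freq, n_components)) + eps    # spectral templates
    H = rng.random((n_components, n_frames)) + eps  # time activations
    for _ in range(n_iter):
        # Lee-Seung multiplicative updates for the Euclidean cost;
        # multiplying by non-negative ratios keeps W and H non-negative.
        H *= (W.T @ V) / (W.T @ W @ H + eps)
        W *= (V @ H.T) / (W @ H @ H.T + eps)
    return W, H


# Toy usage: two "notes" occupying disjoint frequency bands.
rng = np.random.default_rng(1)
W_true = np.zeros((20, 2))
W_true[:10, 0] = rng.random(10) + 0.5
W_true[10:, 1] = rng.random(10) + 0.5
V = W_true @ (rng.random((2, 30)) + 0.1)
W, H = nmf(V, n_components=2, n_iter=1000)
print("relative error:", np.linalg.norm(V - W @ H) / np.linalg.norm(V))
```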

To initiate discussion of the state of the art in the approaches above, and to explore what we should expect from model explanations, this workshop aims to address the following questions:

What are some pertinent real-life use cases for neural network interpretation methods for audio and speech?

What are the best approaches to create neural network interpretations in the audio domain without sacrificing classifier performance? Which applications might favor post-hoc interpretation methods over interpretable designs, or vice versa?

For speech and audio applications, what are some of the most successful methods for generating explanations? What explanation modalities have been used (e.g., highlighted spectrograms, listenable interpretations, generated text), and how does the explanation modality affect the quality of explanations?

How do we evaluate interpretation quality in the audio domain? How meaningful are the current quantitative metrics for evaluating explanations? What are the best practices for qualitative evaluations?

How can we measure the reliability of interpretations, especially for critical decision-making problems?

Can we develop analysis and visualization methods that render large black boxes, such as ASR models, more interpretable and consequently more robust?

Can we transfer insights and experience from interpretability techniques developed for other data modalities, such as images and text, to the audio domain?

For details and manuscript submission, please refer to the call for papers.

Invited Talks

Time: 9.15-10.00 KST

Title: A Recent (Important) Scientific Discovery Made by Explaining a Black Box and Replacing it with an Interpretable Neural Network

Abstract

Let us consider two questions: "Can interpretable deep learning help us make medical decisions?" and "Can we gain new medical knowledge with interpretable deep learning?" I will answer both questions in the affirmative for computer-aided mammography.

In the first part, I will discuss ProtoPNet, an interpretable deep learning approach for computer vision. ProtoPNet uses case-based reasoning, where it compares a new test case to similar cases from the past ("this looks like that"). We have been applying ProtoPNet to computer-aided mammography, and I will discuss the challenges in this application domain. I will also briefly describe the ProtoConcepts algorithm, where each case is compared to a concept, represented by a set of images ("this looks like those").

In the second part, I will discuss a scientific discovery recently made from deep learning. Specifically, we have discovered that it is possible to predict breast cancer up to 5 years in advance from mammograms, a task that radiologists do not perform. We made this discovery by analyzing the Mirai algorithm of Yala et al., a recent black box deep learning model. While exploring Mirai, we discovered something surprising: Mirai’s predictions were almost entirely based on localized dissimilarities between left and right breasts. Guided by this insight, we showed that dissimilarity alone can predict whether a woman will develop breast cancer in the next five years, and developed an interpretable deep learning model (AsymMirai) that does just that. This is the first accurate and interpretable model for predicting breast cancer years in advance.

Bio

Cynthia Rudin is a professor of computer science, electrical and computer engineering, statistical science, and biostatistics & bioinformatics at Duke University, where she directs the Interpretable Machine Learning Lab. Previously, Prof. Rudin held positions at MIT, Columbia, and NYU. She holds an undergraduate degree from the University at Buffalo and a PhD from Princeton University. She is the recipient of the 2022 Squirrel AI Award for Artificial Intelligence for the Benefit of Humanity from the Association for the Advancement of Artificial Intelligence (AAAI). Comparable only to world-renowned recognitions such as the Nobel Prize and the Turing Award, this award carries a monetary reward at the million-dollar level. She is also a three-time winner of the INFORMS Innovative Applications in Analytics Award, was named one of the "Top 40 Under 40" by Poets and Quants in 2015, and was named by Businessinsider.com as one of the 12 most impressive professors at MIT in 2015. She is a fellow of the American Statistical Association and a fellow of the Institute of Mathematical Statistics.

She is a past chair of both the INFORMS Data Mining Section and the Statistical Learning and Data Science Section of the American Statistical Association. She has also served on committees for DARPA, the National Institute of Justice, AAAI, and ACM SIGKDD. She has served on three committees for the National Academies of Sciences, Engineering and Medicine, including the Committee on Applied and Theoretical Statistics, the Committee on Law and Justice, and the Committee on Analytic Research Foundations for the Next-Generation Electric Grid. She has given keynote/invited talks at several conferences including KDD (twice), AISTATS, CODE, Machine Learning in Healthcare (MLHC), Fairness, Accountability and Transparency in Machine Learning (FAT-ML), ECML-PKDD, and the Nobel Conference. Her work has been featured in news outlets including the NY Times, Washington Post, Wall Street Journal, the Boston Globe, Businessweek, and NPR.

Time: 14.15-15.00 KST

Title: Toward Explainable Speech Foundation Models

Abstract

Speech foundation models are an active research area with the potential to consolidate various speech-processing tasks within a single model. A notable trend in this domain is scaling up data volume, model size, and the range of tasks. This scaling trajectory has significantly changed our research landscape, particularly with regard to resource allocation. Notably, it has led to a division of research roles: large tech companies primarily focus on building foundation models, while smaller entities, including academic institutions and smaller companies, concentrate on refining and analyzing these models. While this division has streamlined research efforts, there is growing concern about a potential loss of explainability in these foundation models, primarily due to the limited transparency of the model-building process, which is often dictated by company policies. To address this concern, our group has begun developing large-scale speech foundation models. Our talk introduces Open Whisper-style Speech Models (OWSM), a series of speech foundation models developed at Carnegie Mellon University that reproduce OpenAI Whisper-style training using publicly available data and our open-source toolkit ESPnet. Crucially, our models exhibit several explainable behaviors thanks to the transparency of our model-building process. In addition to showcasing the OWSM models, we discuss related research efforts encompassing software development, data collection, data cleaning, and model evaluation. Throughout this presentation, we would like to discuss how to address the research challenges posed by this shifting landscape within our speech and audio community.

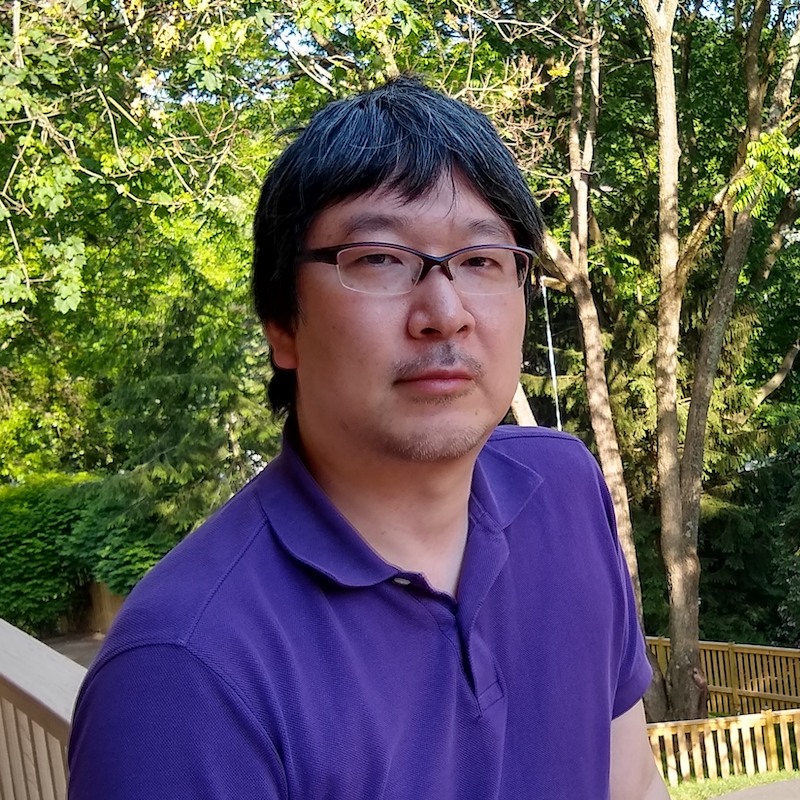

Bio

Shinji Watanabe is an Associate Professor at Carnegie Mellon University, Pittsburgh, PA. He received his B.S., M.S., and Ph.D. (Dr. Eng.) degrees from Waseda University, Tokyo, Japan. He was a research scientist at NTT Communication Science Laboratories, Kyoto, Japan, from 2001 to 2011, a visiting scholar at Georgia Institute of Technology, Atlanta, GA, in 2009, and a senior principal research scientist at Mitsubishi Electric Research Laboratories (MERL), Cambridge, MA, USA, from 2012 to 2017. Before joining Carnegie Mellon University, he was an associate research professor at Johns Hopkins University, Baltimore, MD, USA, from 2017 to 2020. His research interests include automatic speech recognition, speech enhancement, spoken language understanding, and machine learning for speech and language processing. He has published over 400 papers in peer-reviewed journals and conferences and received several awards, including the best paper award from IEEE ASRU in 2019. He is a Senior Area Editor of the IEEE Transactions on Audio, Speech, and Language Processing. He has been a member of several technical committees, including the APSIPA Speech, Language, and Audio Technical Committee (SLA), the IEEE Signal Processing Society Speech and Language Technical Committee (SLTC), and the Machine Learning for Signal Processing Technical Committee (MLSP). He is an IEEE and ISCA Fellow.

Time: 11.15-12.00 KST

Title: Hybrid and Interpretable Deep Neural Audio Processing

Abstract

We will describe and illustrate a novel avenue for explainable and interpretable deep audio processing based on hybrid deep learning. Here, this paradigm refers to models that associate data-driven and model-based approaches in a joint framework, integrating our prior knowledge about the data into simple and controllable models. In the speech or music domain, prior knowledge can relate to the production or propagation of sound (using an acoustic or physical model), the way sound is perceived (using a perceptual model), or, for music, the way it is composed (using a musicological model). In this presentation, we will first illustrate the concept and potential of such model-based deep learning approaches and then describe in more detail their application to unsupervised singing voice separation, speech dereverberation, and symbolic music generation.

Bio

Gaël Richard received the State Engineering degree from Telecom Paris, France, in 1990, and the Ph.D. degree and Habilitation from the University of Paris-Saclay in 1994 and 2001, respectively. After the Ph.D. degree, he spent two years at Rutgers University, Piscataway, NJ, in the Speech Processing Group of Prof. J. Flanagan, where he explored innovative approaches for speech production. From 1997 to 2001, he successively worked for Matra, Bois d’Arcy, France, and for Philips, Montrouge, France. He then joined Telecom Paris, where he is now a Full Professor in audio signal processing. He is also the scientific co-director of the Hi! PARIS interdisciplinary center on AI and Data analytics for society. He is a coauthor of over 250 papers and an inventor on 10 patents. His research interests include signal representations, source separation, machine learning methods for audio/music signals, and music information retrieval. In 2020, he received the Grand Prize of IMT-National Academy of Sciences for his research contributions in science and technology. He is an IEEE Fellow and the past Chair of the IEEE SPS Technical Committee for Audio and Acoustic Signal Processing. In 2022, he was awarded an ERC Advanced Grant of the European Union for the project “HI-Audio: Hybrid and Interpretable deep neural audio machines”.

Time: 13.00-13.45 KST

Title: Understanding or regurgitating? Investigations into probing and training data memorization of audio generative models

Abstract

One of the more fascinating debates in the current hype cycle over large language models (LLMs) is whether LLMs merely copy and regurgitate their training data or whether they learn an underlying world model of human language. Given advancements in audio diffusion models and generative audio transformers, it is interesting to ask what these models know about audio. In the first part of this talk, I will share our recent progress attempting to detect and quantify training data memorization in a large text-to-audio diffusion model. I will then shift to our analysis of a large generative music transformer, where we use simple linear classifier probes to understand what this model knows about music. Finally, I will discuss how we can use these probes to steer the generative model in a desired direction without retraining, enabling more fine-grained interpretable controls compared to only text prompts.
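A linear probe is simply a linear classifier trained on frozen hidden representations: if it predicts a musical attribute well, that attribute is linearly decodable from the layer. The sketch below trains a logistic-regression probe with plain gradient descent on synthetic embeddings that stand in for a transformer's activations, and adds a hypothetical `steer` helper that shifts hidden states along the probe's weight direction. That steering rule is a common, simple choice assumed here for illustration, not necessarily the method presented in the talk; all function names and hyperparameters are hypothetical.

```python
import numpy as np


def train_linear_probe(X, y, lr=0.1, n_steps=500, l2=1e-3):
    """Logistic-regression probe on frozen embeddings X (n, d), labels y in {0, 1}."""
    n, d = X.shape
    w, b = np.zeros(d), 0.0
    for _ in range(n_steps):
        p = 1.0 / (1.0 + np.exp(-(X @ w + b)))  # predicted probabilities
        w -= lr * (X.T @ (p - y) / n + l2 * w)   # gradient step, L2-regularized
        b -= lr * np.mean(p - y)
    return w, b


def probe_accuracy(X, y, w, b):
    return float(np.mean(((X @ w + b) > 0) == y))


def steer(h, w, alpha):
    """Hypothetical steering rule: shift hidden states along the probe direction."""
    return h + alpha * w / np.linalg.norm(w)


# Toy usage: synthetic "embeddings" where one dimension encodes a binary attribute.
rng = np.random.default_rng(0)
d = 16
y = rng.integers(0, 2, size=400)
X = rng.normal(size=(400, d))
X[:, 3] += 3.0 * (2 * y - 1)
w, b = train_linear_probe(X, y)
print("probe accuracy:", probe_accuracy(X, y, w, b))
h_steered = steer(X[:5], w, alpha=2.0)  # raises the probe's logit for these states
```

Because the shift is along the unit-norm probe weights, it raises the probe's logit by exactly `alpha * ||w||` while leaving directions orthogonal to the probe untouched.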

Bio

Gordon Wichern is a Senior Principal Research Scientist at Mitsubishi Electric Research Laboratories (MERL) in Cambridge, Massachusetts. He received his B.Sc. and M.Sc. degrees from Colorado State University and his Ph.D. from Arizona State University. Prior to joining MERL, he was a member of the research team at iZotope, where he focused on applying novel signal processing and machine learning techniques to music and post-production software, and before that a member of the Technical Staff at MIT Lincoln Laboratory. He is the Chair of the AES Technical Committee on Machine Learning and Artificial Intelligence (TC-MLAI), and a member of the IEEE Audio and Acoustic Signal Processing Technical Committee (AASP-TC). His research interests span the audio signal processing and machine learning fields, with a recent focus on source separation and sound event detection.

Time: 8.45-9.15 KST

Title: The Spectrum of Interpretability for Music Machine Learning

Abstract

Explainability and interpretability play an important role in designing musical instruments that offer engaging and rewarding playing experiences. As scaling laws become increasingly significant in the success of machine learning systems for musical audio, the "black-box" nature of these systems runs counter to the original ethos of musical instruments. In this talk, I will outline some projects where the black box has been cracked open in an effort to add more structure to music machine learning systems. These works span the spectrum of interpretability. On one end is MIDI-DDSP, which is highly interpretable by design; while this yields an impressively expressive generative model, its intrinsic interpretability limits its ability to generalize. In the middle of the spectrum are methods that aim to add hierarchical structure to a learned embedding space for separation and classification. At the other end of the spectrum is audioLIME, a post-hoc, model-agnostic method for local explanations using separated sources. Finally, I will touch on future opportunities for explainable music machine learning systems in the age of generative modeling.

Bio

Ethan Manilow is a Research Scientist on the Magenta Team, where he works on making machine learning systems that can listen to and understand musical audio in an effort to make tools that can better assist artists. He did his PhD at Northwestern University and earned a BS in Physics and a BFA in Jazz Guitar from the University of Michigan. He currently lives in Chicago, where he spends his free time smiling at dogs and fingerpicking his acoustic guitar.

Time: 16.00-16.30 KST

Title: Tackling Interpretability Problems for Audio Classification Networks with NMF

Abstract

Deep learning models have rapidly grown in their ability to address complex learning problems in various domains, including computer vision and audio. With their ever-rising impact on human experience, it has become essential to gain interpretability, i.e., human-understandable insights into their decision process, to ensure that decisions are made ethically and reliably. We tackle two major problem settings for the interpretability of audio processing networks: post-hoc and by-design interpretation. For post-hoc interpretation, we aim to interpret a network's decisions in terms of high-level audio objects that are also listenable for the end user. We then extend this approach to an inherently interpretable model with high performance. To this end, we propose a novel interpreter design that incorporates non-negative matrix factorization (NMF). In particular, an interpreter is trained to generate a regularized intermediate embedding from hidden layers of a target network, learnt as time-activations of a pre-learnt NMF dictionary. Our methodology allows us to generate intuitive audio-based interpretations that explicitly enhance the parts of the input signal most relevant to a network's decision. We demonstrate the method's applicability on a variety of classification tasks, including multi-label data for real-world audio and music.
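The final step of an NMF-based interpretation can be sketched in a few lines. In the method described above, an interpreter network predicts the time-activations of a pre-learnt dictionary from the target network's hidden layers; the sketch below substitutes a simpler stand-in that estimates the activations directly from the input spectrogram with the dictionary `W` held fixed. The per-component `relevance` scores are a hypothetical input (in practice they would be derived from the network's decision), and the Wiener-style soft mask is one common way, assumed here, to isolate the selected components. The masked spectrogram could then be inverted with the input phase to obtain a listenable interpretation.

```python
import numpy as np


def activations_fixed_dictionary(V, W, n_iter=200, eps=1e-10):
    """Estimate activations H >= 0 with V ≈ W @ H, keeping the dictionary W fixed."""
    H = np.ones((W.shape[1], V.shape[1]))
    for _ in range(n_iter):
        H *= (W.T @ V) / (W.T @ W @ H + eps)  # multiplicative update on H only
    return H


def relevant_part(V, W, H, relevance, top_k=1, eps=1e-10):
    """Keep the top_k most relevant components of V through a soft mask."""
    keep = np.argsort(relevance)[::-1][:top_k]
    partial = W[:, keep] @ H[keep]
    mask = partial / (W @ H + eps)  # in [0, 1] because everything is non-negative
    return mask * V


# Toy usage: a 2-atom dictionary over disjoint frequency bands.
W = np.zeros((10, 2))
W[:5, 0] = 1.0
W[5:, 1] = np.linspace(0.5, 1.5, 5)
H_true = np.random.default_rng(0).random((2, 12)) + 0.1
V = W @ H_true
H = activations_fixed_dictionary(V, W)
out = relevant_part(V, W, H, relevance=np.array([0.1, 0.9]))  # hypothetical scores
```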

Bio

Jayneel Parekh is currently a postdoctoral researcher at Sorbonne University, working with Prof. Matthieu Cord on understanding representations in large multimodal models. He completed his PhD at Telecom Paris with Prof. Florence d’Alché-Buc and Prof. Pavlo Mozharovskyi, studying interpretability in deep networks for image and audio classification. Before that, he obtained his bachelor's and master's degrees in electrical engineering from the Indian Institute of Technology Bombay in 2019.

His research interests include developing methods for interpretable ML and concept-based representation learning for the audio and visual domains. His work on audio interpretability was recognized by Université Paris-Saclay and Institut Polytechnique de Paris with the 2nd prize for best doctoral project in 2023 (Prix Doctorants STIC). He is also a recipient of the KVPY Scholarship (2013), awarded by the Government of India.

References

[1] Leila Arras, Jose A. Arjona-Medina, Michael Widrich, Grégoire Montavon, Michael Gillhofer, Klaus-Robert Müller, Sepp Hochreiter, and Wojciech Samek. Explaining and interpreting LSTMs. In Explainable AI, 2019.

[2] Sebastian Bach, Alexander Binder, Grégoire Montavon, Frederick Klauschen, Klaus-Robert Müller, and Wojciech Samek. On pixel-wise explanations for non-linear classifier decisions by layer-wise relevance propagation. PLOS ONE, 10(7):1–46, July 2015.

[3] Sören Becker, Marcel Ackermann, Sebastian Lapuschkin, Klaus-Robert Müller, and Wojciech Samek. Interpreting and explaining deep neural networks for classification of audio signals, 2019.

[4] Shreyan Chowdhury, Verena Praher, and Gerhard Widmer. Tracing back music emotion predictions to sound sources and intuitive perceptual qualities, 2021.

[5] Marco Colussi and Stavros Ntalampiras. Interpreting deep urban sound classification using layer-wise relevance propagation, 2021.

[6] Verena Haunschmid, Ethan Manilow, and Gerhard Widmer. audioLIME: Listenable explanations using source separation, 2020.

[7] Saumitra Mishra, Emmanouil Benetos, Bob L. Sturm, and Simon Dixon. Reliable local explanations for machine listening, 2020.

[8] Saumitra Mishra, Bob L. Sturm, and Simon Dixon. Local interpretable model-agnostic explanations for music content analysis. In International Society for Music Information Retrieval Conference, 2017.

[9] Grégoire Montavon, Alexander Binder, Sebastian Lapuschkin, Wojciech Samek, and Klaus-Robert Müller. Layer-wise relevance propagation: An overview. In Explainable AI, 2019.

[10] Hannah Muckenhirn, Vinayak Abrol, Mathew Magimai-Doss, and Sébastien Marcel. Understanding and Visualizing Raw Waveform-Based CNNs. In Proc. Interspeech 2019, pages 2345–2349, 2019.

[11] Francesco Paissan, Cem Subakan, and Mirco Ravanelli. Posthoc interpretation via quantization, 2023.

[12] Jayneel Parekh, Sanjeel Parekh, Pavlo Mozharovskyi, Florence d’Alché Buc, and Gaël Richard. Listen to interpret: Post-hoc interpretability for audio networks with NMF. In Alice H. Oh, Alekh Agarwal, Danielle Belgrave, and Kyunghyun Cho, editors, Advances in Neural Information Processing Systems, 2022.

[13] Verena Praher, Katharina Prinz, Arthur Flexer, and Gerhard Widmer. On the veracity of local, model-agnostic explanations in audio classification: Targeted investigations with adversarial examples, 2021.

[14] Paris Smaragdis and J.C. Brown. Non-negative matrix factorization for polyphonic music transcription. 2003 IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (IEEE Cat. No.03TH8684), pages 177–180, 2003.

[15] Minz Won, Sanghyuk Chun, and Xavier Serra. Toward interpretable music tagging with self-attention, 2019.

[16] Pablo Zinemanas, Martín Rocamora, Marius Miron, Frederic Font, and Xavier Serra. An interpretable deep learning model for automatic sound classification. Electronics, 10:850, 2021.

[17] Pablo Zinemanas, Martín Rocamora, Eduardo Fonseca, Frederic Font, and Xavier Serra. Toward interpretable polyphonic sound event detection with attention maps based on local prototypes. November 2021.